04 Mar 2024

I remember distinctly the first time I held a Lucy Ellmann book,

unboxing the slim volume in the basement of the bookstore in which I

was working in 2003. Initially seduced by the cartoonish suggestion of

smut on its cover, I suspected that this was not entirely a serious

work. The unusual stylistic conceit that was apparent from leafing

through the first pages seemed to confirm this, though there was the

suggestion of something more to it, a blend of the irreverent and

existential. I had to have it, and that copy never made it to the shop

floor. I was so enthused with it that I almost immediately sent my

copy, via a somewhat dubious inter-branch transfer, to a bookseller

friend across the country, and if my unreliable memory serves this was

also the start of a torrid love affair between us, which, though

vulgar of me, I only mention to illustrate the potency of Ellmann’s

writing. I got everything else I could find in print, eagerly awaited

her next release, and then . . . nothing happened for a while. And so

I was surprised to discover in 2022, isolated mid-pandemic, in an

effort to reconnect with the reading habits of my younger self, that

Ellmann had written a 1000 page stream of consciousness novel,

endorsed by my alma mater no less (ah, Goldsmiths, still a reliable

champion of so many things I hold dear), had got the attention of the

Booker lot even, whose panel I assume was far too timid to promote

such an outlandishly ambitious work into its coveted first prize.

I picked it up shortly before my father passed away this year. Its

themes of grief and parental bonds of course made it an apt companion,

and it wove its way into, and became an essential part of, my own grieving

process. Its length and narrative style intimidated me at first, but

my fears were soon allayed once I made the commitment to start it. It

was, contrary to my projections, surprising in its accessibility.

Literature, Peter Kivy asserts, is also a performance art, albeit one

in which we most frequently perform out the meaning to ourselves. I

quickly found that I needed a more compelling performance to do the

work justice. I was so charmed by the dearness of the narrator, which

seemed to evoke the same fondness I have for Philippa Dunne. In the

madness of grief I even sought out Dunne, in the hope that she would

have a Cameo or some such, to read sections of the novel. I was

unsuccessful, but I settled by reading almost the entire work aloud,

to myself, in my best (bad) impersonation of a sweetly fragile,

middle-aged Irish mum that Dunne plays so well in both Motherland

and The Walshes, in spite of the knowledge that the narrator is from

New England. It did afford me though a kind of softness that is not so

readily available in my own voice, and one more willing to embrace the

flights of whimsy, pathos and daftness that the narrator

invites. Through reading aloud, I felt at times almost that I had

become, if only momentarily, her. And I’m sad that I’m now done with

the book, it’s over now and I have to enter some new phase of life and

loss without her.

It is curious to me that the narrator frequently talks about the stories

from Hollywood films from the point of view of the actors who played

in them, as if the characters in the stories were really them. I

wonder to what degree this hints at Ducks also being a

semi-autobiographical novel. It would be pointless to labor over this,

though there are some undeniable at least superficial similarities

with the people in the novel and aspects of the author’s family life

that are in the public domain. Either way, there is something

distinctly authentic and heartfelt in its being what appears to me a

1000 page love letter to the author’s mother.

Over the course of those pages I think I have come to know more about

this fictional character than any other, that she has confided so much

in me, details that she may not even have revealed to any other person

in the world of the novel, things she may not even know herself. She

is polite to a fault, she does not swear, dodges obscenity and

intrusive erotic thoughts (perhaps uncomfortably the most overtly

erotic portion of the novel concerns the interactions of two mountain

lions). That the novel does not indulge the kinds of impulses more

readily associated with the stream of consciousness novel with abandon

is refreshing. She is not quite repressed but rather wishes to forget

all that is most ghastly about human frailty - namely a sexualized

conception of self in the wake of life-changing illness, and the

echoes of her own mother’s devastating decline. She is quite unafraid,

conversely, to stare down the most appalling aspects of American

history, right up to the present day. She is a mirror for the mess

America is in, and a hint at its salvation - mothers and motherhood

(the quotation from Tsutomu Yamaguchi, the Japanese marine engineer

who survived both the Hiroshima and Nagasaki bombings, that “the only

people who should be allowed to govern countries with nuclear weapons

are mothers, those who are still breast-feeding their babies”,

included at the end of the book, seems to cement this idea). She is

concerned with the right way to do a lot of things (baking, color

combinations, purple martin house maintenance), a kind of distillation

of the wisdom of many Ellmann mothers no doubt. She seems to have at

once exquisite taste and is yet doggedly unfussy. She has memorized a

litany of massacres and headlines. There is something charming about

her little grudges and her going back and forth, trying to see

everyone from both sides. I love her dearly more than any other

character I’ve known.

Though it has been widely praised, it has also attracted some I think

unfair criticisms. Kirkus Reviews feels it “could have made its

point in a quarter the space”, and Nick Major, writing in The

Herald, “can’t say for certain what this novel’s all about.” I’m only

providing these here because I feel they say so much about what I find

wrong with the literary establishment in general. At the risk of

sounding crass, there is something positively Freudian about men’s

need for a novel to have a single point or for its being over as

quickly as possible. It contains, as Walt Whitman says, multitudes. It

is a novel surging with ideas. Narratives undulate, appearing at first

barely visible on the horizon, persistently swell and gain force each

time they come round again before crashing onto the shore of awareness

with drama scarcely imaginable from its point of origin. The narrator

frequently derides herself as a “whirling dervish” yet this is

precisely the quality that so successfully drives the emotional heart

of this more womanly novel. “recoil and leap, leap and recoil”.

“I think these are […] rules, we are just used to believing that you

can’t do that, you’re not allowed to keep a sentence going, but

actually it turned out it is possible, and why not?” Ellmann says in

her promotional video for The Booker Prize. For better or worse I

may not have dared to write a review of this or any other book so

unashamedly from my own subjective experience without her influence. I

hope she is right about women and motherhood, and that the future is

more feminine and motherly. I sorely miss those qualities which

pervaded my consciousness for all too brief a time this year. I hope

that they did change me in the process, and I am better for their

influence.

20 Jan 2021

Like countless others, I learned Ruby on Rails (and a great deal more) from Michael Hartl’s Ruby on Rails Tutorial. Though I haven’t kept up with all the changes in the most recent revisions, I know that back in 2012 things were a bit different. In particular, I learned that it was The Done Thing to eschew the testing framework that came with Rails in favor of RSpec, and to toss aside the fixtures library in favor of something called Factory Girl (since renamed Factory Bot). Now I believe Hartl has since abandoned much of that in preference to using only the things that come with Rails by default. I’m not that surprised - I recall this being a lot to learn all at once, and it felt awkward to be fighting from the outset the framework I was trying to learn - whose very ethos is the following of conventions it sets forth - before I could even grasp the nuances of why I might be doing this.

As influential as Hartl’s tutorial may have been, this change in the tide it seems has failed to make much of a splash in the Ruby community at large. I’ve only ever once collaborated on a Rails project that followed all of the conventions. Almost every project I’ve worked on professionally has been of the Rails/RSpec/Factory Bot variety. In this post I want to expose some of the assumptions underpinning these choices, and to question if these assumptions are still valid. I’ll also suggest that the Rails defaults are not merely a better choice for beginners, as Hartl indicates, but may be better for everyone all round.

I don’t want to dwell too much on the relative merits of RSpec. I think it is a fine testing framework, I’ve contributed to it, and I especially love its extensive expectations library, which I’ve also written about before. I also love Minitest. Though I’ve used it less, I am now using Minitest for all my new personal projects. I won’t say that one is better than the other. Maintainer Sam Phippen describes RSpec as a tool that “excels at testing legacy code” and this bears out at least in my experience. I’d prefer to use RSpec to retrofit a test suite to a legacy codebase because of the ease with which you can reach through objects that are tightly coupled using the full flexibility that Ruby provides. I’d prefer to use Minitest for greenfield development precisely because of the resistance it provides to creating tightly coupled code in the first place.

Factory Bot, on the other hand, I think invites some scrutiny. thoughtbot sold Factory Bot entirely on the basis of addressing the Mystery Guest problem. It trades on allowing you to write clearer tests at the expense of having to create and roll back every test fixture you need every time, starting every test with a pristine, blank database. This can be a major cause of test slowness, and it is worth pointing out that test slowness in general has been a common complaint for as long as I have been using Rails. You would think then that the Mystery Guest problem had better be a significant one for this to be a good trade-off.

For anyone unfamiliar, the Mystery Guest problem is defined as follows:

The test reader is not able to see the cause and effect between fixture and verification logic because part of it is done outside the Test Method.

In a vanilla Rails application, this problem commonly manifests in the use of Rails’ persistent fixtures:

RSpec.describe User do
  it "has a full name" do
    expect(users(:alice).full_name).to eq("Alice Anderson")
  end
end

To the test reader, it’s not clear where the user’s full name comes from, or from what data it is derived. To contrast, with Factory Bot:

RSpec.describe User do
  it "has a full name" do
    user = create(:user, first: "Alice", last: "Anderson")
    expect(user.full_name).to eq("Alice Anderson")
  end
end

In this example the relationship between the data that was passed to the fixture and the derived outcome of the full name text is immediately clear to the reader.

The Mystery Guest is an XUnit antipattern, and the use of factories facilitates a better XUnit pattern, namely the Four-Phase Test. The idea behind it is that every test should tell a story - one that has a beginning, a middle and an end (and sometimes an epilogue):

RSpec.describe User do
  it "can change name" do
    # setup
    user = create(:user)

    # exercise
    user.first = "Alice"
    user.last = "Anderson"

    # verify
    expect(user.full_name).to eq("Alice Anderson")

    # teardown
    user.destroy!
  end
end

Not all tests require all four phases, and many can omit some of the steps:

RSpec.describe User do
  it "has a full name" do
    # setup
    user = create(:user, first: "Alice", last: "Anderson")

    # exercise
    # nothing to do here....

    # verify
    expect(user.full_name).to eq("Alice Anderson")

    # teardown
    # nothing to do here - the test framework takes care of tearing down fixtures
  end
end

It is only natural then that thoughtbot feel strongly that you should also follow other XUnit test patterns when you write your specs. The big problem here is that RSpec is not an XUnit-style test framework, and it is my observation that most people do not follow XUnit best practices, preferring to write highly idiomatic RSpec code instead:

RSpec.describe User do
  let(:first) { "Alice" }
  let(:last) { "Anderson" }
  subject(:user) { create(:user, first: first, last: last) }

  # 100s
  # of
  # lines
  # of
  # test
  # code

  describe "#full_name" do
    its(:full_name) { should eq("Alice Anderson") }
  end
end

As you can see, we have now thwarted our attempt at addressing the Mystery Guest problem simply by writing idiomatic RSpec code. It’s a particularly egregious example that can be mitigated to some degree but it’s clear that the Four-Phase Test pattern does not map neatly onto RSpec’s DSL. RSpec is not an XUnit test framework and it is not its goal to facilitate use of all the XUnit patterns.

If the authors of Factory Bot recommend that we discard parts of the RSpec DSL in the pursuit of more readable, XUnit-style tests, it is surprising that they did not recommend foremost the use of an XUnit test framework, such as Minitest, instead:

class TestUser < Minitest::Test
  def test_full_name
    user = create(:user, first: "Alice", last: "Anderson")
    assert_equal("Alice Anderson", user.full_name)
  end
end

This is arguably the most natural way to write this test in Minitest. Though it’s possible to muddle its readability there are far fewer opportunities to do so.
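To see the four phases laid out in Minitest without needing Rails or Factory Bot installed, here is a minimal sketch. The User class is a hypothetical plain-Ruby stand-in for the model, and the test class and method names are assumptions made purely for illustration:

```ruby
require "minitest/autorun"

# Hypothetical stand-in for the User model, written in plain Ruby so the
# example runs without Rails or Factory Bot.
class User
  attr_accessor :first, :last

  def initialize(first:, last:)
    @first = first
    @last = last
  end

  def full_name
    "#{first} #{last}"
  end
end

class TestUserFourPhase < Minitest::Test
  def test_full_name_reflects_a_changed_last_name
    # setup: every value the assertion depends on is visible in the test body
    user = User.new(first: "Alice", last: "Anderson")

    # exercise
    user.last = "Baker"

    # verify
    assert_equal("Alice Baker", user.full_name)

    # teardown: nothing to do, the object is simply discarded
  end
end
```

Because each test is an ordinary method, the setup has to sit either in the method itself or in an explicit setup hook, which keeps the distance between cause and effect short.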

If then we find our test suites still rife with Mystery Guests in spite of using Factory Bot, we have gained nothing and only made our tests slower with all the extra overhead.

For this reason I recommend that, if you want to use Factory Bot, you use it with an XUnit-style framework such as Minitest. It is much harder in my view to enforce the Four-Phase Test style in your organization when this goes against the grain of the RSpec DSL. I also recommend only using Factory Bot’s most basic features, avoiding for instance traits, which take details of the fixture setup away from the test body and abstract them into concepts defined in the factory definition - undercutting the original aim to eliminate the Mystery Guest problem.
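To illustrate the concern about traits, here is a rough plain-Ruby sketch. It does not use Factory Bot at all: Person, USER_DEFAULTS, USER_TRAITS and build_user are hypothetical stand-ins that only mimic how a trait moves setup details out of the test body.

```ruby
# Hypothetical stand-ins only. This is NOT Factory Bot's API; it just
# imitates the shape of a factory with defaults, traits and overrides.
Person = Struct.new(:name, :role, keyword_init: true)

USER_DEFAULTS = { name: "Alice Anderson", role: "member" }.freeze
USER_TRAITS   = { admin: { role: "admin" } }.freeze

def build_user(*traits, **overrides)
  # Start from the defaults, layer each named trait on top, then apply overrides.
  attributes = traits.reduce(USER_DEFAULTS) do |attrs, trait|
    attrs.merge(USER_TRAITS.fetch(trait))
  end
  Person.new(**attributes.merge(overrides))
end

# With a trait, the reader must look up :admin elsewhere to learn what it sets.
via_trait = build_user(:admin)

# With explicit attributes, cause and effect sit side by side in the test body.
explicit = build_user(role: "admin")
```

Both calls produce the same object, but only the second tells the test reader why the role is what it is.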

Conversely, if you are not tied to using Factory Bot, consider using Rails’ fixtures instead. If you are already writing idiomatic RSpec and therefore most likely tolerating a certain amount of Mystery Guest pain, there is a good chance that you won’t feel any new discomfort by doing this alone. And your test suite will almost certainly be faster.

But honestly, why not Minitest and Rails’ fixtures? This is precisely the setup that Rails provides you with right from the moment you type rails new. It’s also the one that Michael Hartl teaches in his tutorial. It’ll make both learning Rails and onboarding people onto your project more accessible by reducing the number of things they have to learn just to get started. And it’s much simpler to be able to say that you follow all of the Rails conventions as you point to its documentation, than to have to explain all the individual choices you made when departing from the defaults. You’ll have faster tests, with less setup, because you won’t have to recreate the world for every integration test that you run. I don’t necessarily love everything about this approach. But that’s also true of other parts of Rails. And I still choose Rails because of the experience of ease I feel when I just follow the conventions that it has established. And that goes for writing tests too.

My experience right from starting with the Ruby on Rails Tutorial, through everything I’ve worked on professionally, has been that every project has had some variation of this complicated setup I learned back in 2012. If this is your experience too, I invite you to experiment in your next Rails project with the default test framework. Keep an open mind, play around with it, notice the differences, allow yourself to feel any of the pain points that come up (and perhaps any pleasant surprises) for yourself before evaluating. If you find yourself still preferring your bespoke setup, you may walk away with a richer understanding of the problems those tools were trying to address, and maybe you’ll write better tests for it. Or, like me, you may decide that whatever received wisdom we had back in 2012 doesn’t suit us as well in 2021, and it’s time for a change.

13 Jan 2021

I turned the last page on the wonderful Mason & Dixon, by Thomas Pynchon, last night.

I’ve been reading this for the best part of a year, and, in another sense, for over ten years (I was reading this book, stuffed with Greyhound tickets and other bookmark-shaped mementos, when I met my wife - though I lost the thread about 200 pages in, something I have done more than once before reading Pynchon - and I was approaching its final chapters on the day that she moved out).

This is the book that taught me how to read again, that demanded I slow down to take everything in, against the at times overwhelming feeling that I might never finish it, or that I was not smart enough to understand it. It’s been a hard lesson accepting my reading difficulties. Trying to change the way that I read has only done harm to my understanding and enjoyment of fiction, which is why I’ve barely read any in . . . the last ten years.

It’s hard too to imagine that a book could sustain my interest and stay alive in my memory and imagination for this long. But . . . it has. It’s frequently been hard work, and more than I could deal with at times, having no attention left to give it at the end of the day, sometimes for weeks at a time. But it’s always been rewarding.

Pynchon has the best prose of any author I’ve read that’s alive today (though please don’t judge that endorsement on the quality of mine), coupled with an indefatigable imagination, describing events that are meticulously researched in historical detail yet somehow also populated with talking dogs, sentient chronometers, a golem, giant vegetables, ghosts, mechanical ducks, elves and gnomes and Popeye the Sailor Man. I thought it would never be over, and now it hurts to put it down.

06 Aug 2018

Some years ago when I was living in an Ashram in rural Virginia, I met

a wise, old man. I knew he was a wise, old man because he embodied

certain stereotypes about wise, old men. First, he was a Gray-Bearded

Yogi. Before this he was a New Yorker and a practicing Freudian

Psychoanalyst. Sometimes he would say a lot of interesting and funny

things, and at other times he would smile and nod and say nothing at

all. I can’t remember if he ever stroked his beard.

One day he said to me, “Asoka,” (the name under which I was going at

the time). “Asoka,” he said, “do you know what is the single driving

force behind all our desires, motives and actions?” I thought about

this for some time. I had my own ideas but, knowing he was a Freudian,

suspected that the answer was going to be something to do with the

libido.

“You probably suspect that the answer is going to be something to do

with the libido,” he said. “But it’s not.” I listened patiently. “It’s

the need … to be right.” I laughed. While I wasn’t totally surprised

not to have got the right answer, this particular one for some reason

blew me away. I wasn’t prepared. I had never framed human nature in

those terms before.

I wouldn’t expect anyone else to have the same reaction. I suspect

others would find this to be either obvious, banal, or plainly wrong,

and if this is you, I don’t intend to convince you otherwise (there

might be a certain irony in trying to do so). What I want to do

instead is document what became for me a personal manifesto, and a

lens through which I began to look at the world. As a lens, you are

free to pick it up, take a look through it, and ultimately discard it

if you wish. But I rather like it a lot.

What happened that day was really only the start of a long

process. Eventually I would see that a preoccupation with being right

was essentially an expression of power and that rectifying (from the

Late Latin rectificare - to “make right”) was about exerting power

over others. I would also see that this preoccupation had perhaps more

to do with the appearance of being right, and that the cost of

maintaining it would be in missed opportunities for learning. And I

would also see that, while the rectification obsession was not a

uniquely male problem, there seemed to be a general movement of

rightness from that direction, and we would do well to examine that

too.

I was the principal subject of my examination, and it has become a

goal to continue to examine and dismantle the ways in which I assert

“rightness” in the world.

A little bit about myself

Allegedly I come from a long line of know-it-alls. Unsurprisingly,

it’s a behavior that passes down the male side of my family. Of

course, I don’t really believe this is a genetic disposition, and it’s

easy to see how this might work.

As a child I remember my family’s praising me for being ‘brainy’. They

gave me constant positive feedback for being right. As long as I

appeared to be right all the time I felt like I was winning. In

actuality, though, I was losing. I learned to hide my ignorance of

things so as never to appear wrong. I’ve spent most of my life missing

answers to questions I didn’t ask. I became lazy, unconsciously

thinking that my smarts would allow me to coast through life.

Once I left School, and with it a culture principally concerned with

measuring and rewarding rightness, I had a hard time knowing how to

fit in or do well. It would take years of adjustments before I felt

any kind of success. Whenever something became hard, I’d try something

new, and I was always disappointed to find that opportunities were not

handed to me simply because I was ‘smart’. When I didn’t get into the

top colleges I applied to it devastated me. I would later drop out of

a perfectly good college, get by on minimum wage jobs when I was lucky

enough even to have one, and fail to understand why I didn’t get any of

the much better jobs I applied for.

I stumbled upon a section in Richard Wiseman’s 59 Seconds: Think a

Little, Change a Lot that claimed that children who are praised for

hard work will be more successful than those that are praised for

correctness or cleverness (there is some research that supports

this). It came as a small comfort to learn that I was not alone. More

importantly, it planted in me a seed whose growth I continue to

nurture today.

I still don’t fully grasp the extent to which these early experiences

have shaped my thinking and my behavior, but I have understood it well

enough to have turned things around somewhat, applied myself, and have

some awareness of my rectifying behavior, even if I can’t always

anticipate it.

It is one thing to intervene in your own actions toward others, to

limit your own harmful behavior. It is quite another when dealing with

the dynamics of a group of people all competing for rightness. What

I’m especially interested in currently is the fact that I don’t

believe I’ve ever seen such a high concentration of people who are

utterly driven by the need to be right all the time as in the tech

industry.

Let’s look at some of the different ways that being right has manifested

itself negatively in the workplace.

On Leadership and Teamwork

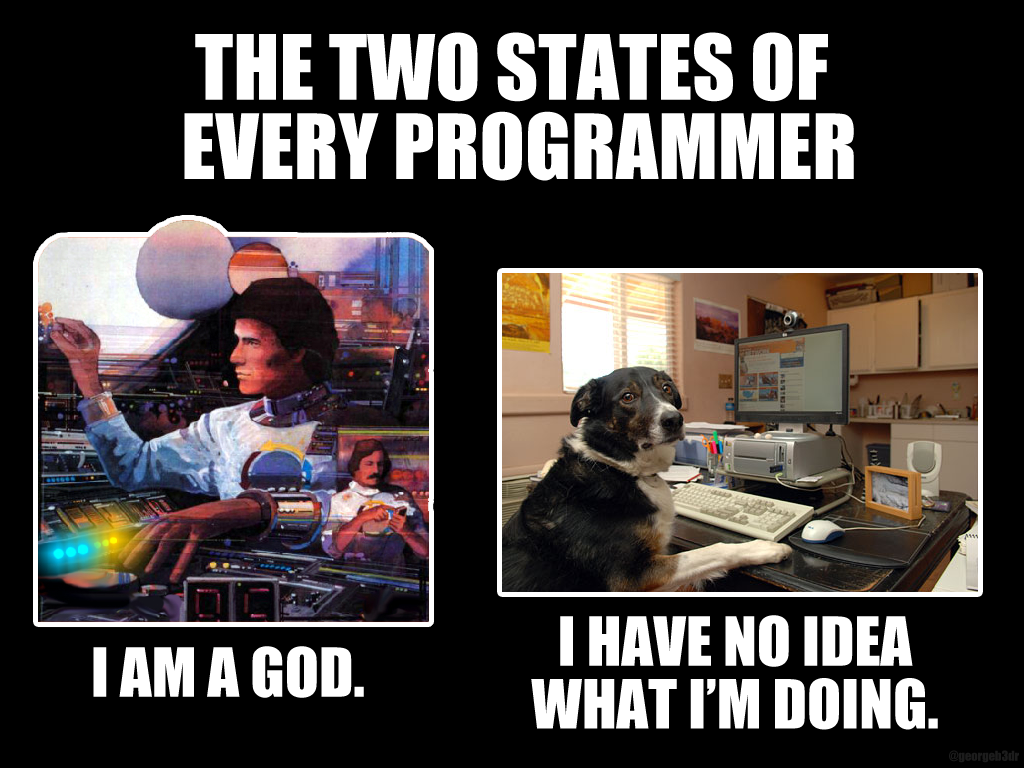

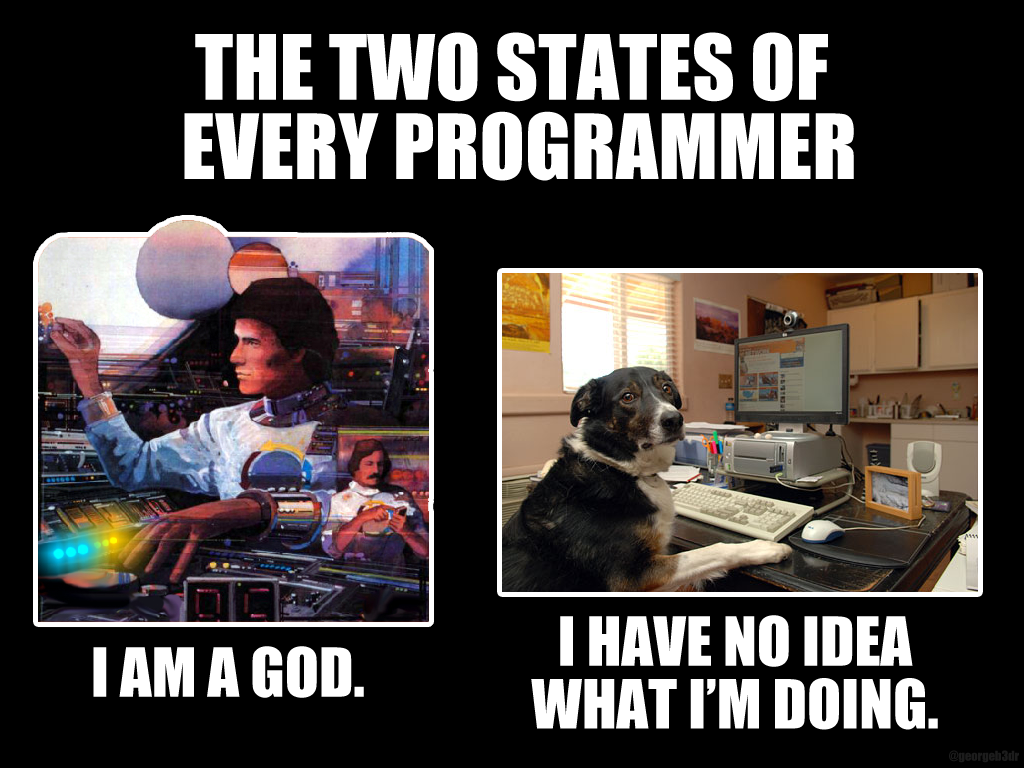

There is a well-known meme about the experience of being a programmer,

and it looks like this:

There is some truth to this illustration of the polarization of

feelings felt through coding. However, it is all too common for

individuals to wholly identify with one or the other. On the one side

we have our rock stars, our 10x developers and brogrammers. On the

other we have people dogged by imposter syndrome. In reality, the two

abstract states represent a continuous and exaggerated part of us

all. Having said that, I believe that everyone is in the middle, but

much closer to the second state than the first. All of us.

In my personal experience I have felt a strong feeling of camaraderie

when I’m working with people who all humbly admit they don’t really

know what they’re doing. This qualification is important - nobody is

saying they are truly incompetent, just that there are distinct limits

to their knowledge and understanding. There is the sense that we don’t

have all the answers, but we will nonetheless figure it out

together. It promotes a culture of learning and teamwork. When

everyone makes themselves vulnerable in this way great things can

happen. The problem is that it only takes one asshole to fuck all that

up.

When a team loses its collective vulnerability as one person starts to

exert rightness (and therefore power) downwards onto it, we lose all

the positive effects I’ve listed above. I’ve seen people become

competitive and sometimes downright hostile under these

conditions. Ultimately it rewards the loudest individuals who can make

the most convincing semblance of being right to their peers and

stifles all other voices.

This is commonly what we call “leadership”, and while I don’t want to

suggest that leadership and teamwork are antagonistic to each other, I

do want to suggest that a certain style of leadership, one concerned

principally with correctness, is harmful to it. A good leader will

make bold decisions, informed by their team, to move forward in some

direction, even if sometimes that turns out to be the wrong one. It’s

OK to acknowledge this and turn things around.

On Productivity

A preoccupation with being right can have a directly negative effect

on productivity. One obvious way is what I will call refactoring

hypnosis - a state wherein the programmer forgets the original intent

of their refactoring efforts and continues to rework code into a more

“right” state, often with no tangible benefit while risking

breakages at every step.

Style is another area that is particularly prone to pointless

rectification. It is not unusual for developers to have a preference

for a certain style in whatever language they are using. It is

interesting that while opposing styles can seem utterly “wrong” to the

developer it seems that this is the area of software development in

which there are the fewest agreements over what we consider to be good

or “right”. In Ruby there have been attempts to unify divergent

opinion in the Ruby Style Guide but it has been known to go back

and forth on some of its specifics (or merely to state that there are

competing styles), and the fact that teams and communities eventually

grow their own style guides (Airbnb, GitHub, thoughtbot, Seattle.rb)

shows that perhaps the only thing we can agree on is that a codebase

be consistent. Where it lacks consistency there lie opportunities to

rectify, but this is almost always a bad idea if done for its own

sake.

Finally, being right simply isn’t agile. One of the core tenets of the

Agile Manifesto is that while there is value in following a plan,

there is more value in responding to change. This seems to suggest

that our plans, while useful, will inevitably be wrong in crucial

ways. An obsession with rightness will inevitably waste time -

accepting that we will be wrong encourages us to move quickly, get

feedback early on and iterate to build the right thing in the shortest

time.

On Culture

As I’ve asserted above, none of us really knows what we are doing

(for different values of “really”), and indeed this sentiment has been

commonly expressed even among some of the most experienced and

celebrated engineers. I think that there is both humor and truth in

this but, while I believe the sentiment is well-intentioned, words are

important and can sometimes undermine what’s being expressed

here. I’ve seen people I look up to utter something of the form, look,

I wrote [some technology you’ve probably heard of], and I still do

[something stupid/dumb] - what an idiot! This doesn’t reassure me at

all. All I think is, wow, if you have such a negative opinion of

yourself, I can’t imagine what you’d think of me.

Perhaps instead of fostering a culture of self-chastisement we can

celebrate our wrongness. We know that failure can sometimes come at

great cost, but it’s almost always because of flaws in the systems we

have in place. A good system will tolerate certain mistakes well, and

simply not let us make other kinds of mistakes. A mistake really is a

cause for celebration because it is also a learning, and celebrating

creates an opportunity to share that learning with others while

simultaneously destigmatizing its discovery. I am happy that my team

has recently formalized this process as part of our weekly

retrospectives - I would encourage everyone to do this.

One of the most harmful ways I’ve seen the rectification obsession

play out is in code reviews. The very medium of the code review

(typically GitHub) is not well set up for managing feelings when

providing close criticism of one’s work. We can exacerbate this with

an obsession with being right, especially when there are multiple

contenders in the conversation.

I have been on teams where this obsession extends into code review to

the point where, in order for one to get one’s code merged, a reviewer

has to deem it “perfect”. Ironically, this seems less an indicator of

high code quality in the codebase and more of the difficulty of ever

making changes to the code subsequently. Having your work routinely

nitpicked can be a gruelling experience - worse still when reviews take

place in multiple timezones and discussions go back and forth over

multiple days or even weeks. Personally, I’ve been much happier when

the team’s standard for merging is “good enough”, encouraging

iterative changes and follow up work for anything less crucial.

It is hard to overstate the importance of language when looking at

these interactions. There has been much talk recently about the use of

the word “just” (as in “just do it this way”) in code review, and I am

glad that this is undergoing scrutiny. It seems to suggest that not

only is the recipient wrong, but deeply misguided - the “right” way is

really quite simple. This serves to exert power in a humiliating way,

one that minimizes our effort and intellect along the way. Of course,

there are countless more ways that we can do harm through poorly

chosen words, but I am glad that we have started to examine this.

On Mansplaining

It is telling to me that the standard introduction to any

mansplanation, well, actually…., is almost the ultimate expression

of rectification. It is appropriate that we have identified this

behavior as an expression of masculine insecurity - the man uses sheer

volume and insistence to counter a position he poorly

understands. More innocent mansplanations still work in the same way -

without contradicting, a man may simply offer some explanation (I am

right!), believing this to be helpful to the person whose ignorance he

has assumed.

I am aware that there could be some irony in trying to frame the whole

of this phenomenon in terms of my manifesto, but it is not my

intention to do so. It is rather that mansplaining reveals a great

deal about the harm done and intentions behind rectifying behavior.

Doing the Right Thing

I do not want to suggest a feeling of smug superiority - just about every

harmful behavior I have described above I have also engaged in at some

point. I know I will continue to do so, too. But I want this to be

better, and I want to work with people who are also committed to these

goals.

Looking back to the start of my journey, I have to question now the

intent of the wise, old man in his original assertion about human

behavior. Was this yet another example of some unsolicited advice from

a person who exploited their maleness and seniority to add more weight

to their pronouncements than perhaps they deserved? Is this all that

wise, old men do? Almost certainly.

As it turned out, I did not wholly embrace it as truth (none of the

above makes any claims to social science or psychology), but neither

did I reject it wholesale. I discovered that while it may not be literally

true, I might arrive at smaller truths by entertaining it as an idea

(the contradiction is probably what made me laugh). I’m grateful that

it was shared with me.

There is nothing wrong with being right. Rather, it is the

desire to be right that colors our judgment, that leads us on the

wrong path. Being right is also not the same thing as doing the right

thing. And I want to focus my efforts now on this, while trying to

free myself from the tyranny of being right.

19 Feb 2018

When I started my first job I was programming in Ruby sans Rails,

and knew I had to get up to speed quickly. I purchased Russ Olsen’s

Eloquent Ruby (2011) and read it cover to cover, though I struggled

a bit towards the end. I tried some things out, and then I read it

again. Then I played around some more. I learned the joys of

metaprogramming and then burnt my fingers. I went back and read it a

third time, now with the understanding that some of the things in the

back were dangerous.

Up until Eloquent Ruby, the resources I had used to learn all had

the same agenda: how to tell the computer to do stuff. In other words,

they would describe the features of things without spending too much

time on the attitudes toward them. And it is much harder to do the

latter.

The concept of Eloquent Ruby came as a revelation to me at the

time - the idea that there were not just rules that make up a

language, but idioms too, attitudes that might even change over

time. I loved the idea that I could learn to speak Ruby not just

adequately but like a native.

By this time I felt bold enough to call myself a Rubyist, and I owed

much of the enthusiasm I felt toward the language, and the success I

had early on in my career, to this book. I bought another of Olsen’s

books on design patterns and read it cover to cover, again, multiple

times. I was ambitious and knew that “good” programmers had experience

and expertise working in different programming paradigms, though I was still

not sure in what direction I would go. So I learned with great

interest that he was either working on, or had at least declared an

intention to write, a book about Clojure.

I had no idea at this point what Clojure or even Lisp was, but the

author had gained my trust enough for me to want to read about his new

book, whatever it was about.

And of course I had no clue at the time that this book would be in the

pipeline for years. I understand; these things take time. But, being

impatient, when I felt confident enough to start learning another

language, I decided to go ahead with Clojure anyway.

I have now played with it for about 3 years, have pored over some

books that were good at what they set out to do (exhaustively survey

the features of the language), have built some things of a certain

size. Alas, not getting to code and think in my second language every

day, I have never felt that I really “got” Clojure, that I really knew

it in the same way that I knew Ruby. I could not properly call myself

a Clojurist (do they even call themselves that? See, I don’t know).

So I was pretty psyched when I learned that Olsen’s book was nearing

completion, and that its title was, perfectly, Getting Clojure. When

it came out in beta form, I did something I almost never do - I bought

the eBook (I typically like to do my reading away from the computer).

And it has not disappointed. I am so happy that all the elements of

Olsen’s style are present and on top form - his gentle humor, his

writing with compassion for the learner. He knows crucially what to

leave out and what to explain deeper, to illustrate. There are

examples, contrived, yet so much more compelling than most (it’s hard

to formulate new examples that are sufficiently complex yet small and

engaging enough to sustain interest). There are lots of details on

why you might want to use a particular thing, or more importantly,

why you might not want to - in Olsen’s words “staying out of

trouble” - details that are so vital to writing good, idiomatic code

in whatever language. And there are examples of real Clojure in use,

often pulled from the Clojure source itself, that not only illustrate

but give me the confidence to dive in myself, something that wouldn’t

have occurred to me alone.

It seems ironic that Getting Clojure isn’t the book I wanted way

back when I first heard about it, but it is the book that I need now.

I enjoyed going over the earlier chapters, cementing knowledge along

the way, forming new narratives that simplify the surface area of the

language while giving me the things I need to know to find out

more. And it gave me the confidence to dive way deeper than I thought

would be comfortable. For example, Olsen encourages you to write your

own Lisp implementation as an aside while he looks at the core of

Clojure’s. I went ahead and did this and am so glad that I did - I

feel like I have gained a much deeper understanding of computer

programs in general, something that may have been lacking because I do

not come from a Computer Science background.

I have no doubt that this book will appeal to many others from

different backgrounds, different places in their development. But I

can confidently say that if, like myself, you are self-taught, or

don’t come from a “traditional” background, perhaps Ruby or a similar

dynamic language is your bread and butter but you are trying to expand

your horizons, if you need materials that focus on the learner and

building up understanding in a more structured way, Getting Clojure

gets my highest possible recommendation.